Well-played? More like a major strategic blunder. It pushes all Chinese companies to seek out and/or nurture domestic suppliers, which for economic reasons they were not doing

Nah, not really desperation. This is just another well-played move in the grand scheme of the China-US trade war, dressed up as a punitive response to a single company's violation of sanction rules.

Trade War with China

- Thread starter Ultra

- Start date

- Status

- Not open for further replies.

SinoSoldier

Colonel

Well-played? More like a major strategic blunder. It pushes all Chinese companies to seek out and/or nurture domestic suppliers, which for economic reasons they were not doing

Seeking out domestic suppliers already puts their products two steps behind those of Apple/Samsung/etc. because the SoCs from Chinese suppliers are not as capable as Western-built chips. Nobody is saying that the Chinese won't invest in their domestic chip industry in the long run (by which time the US ban would've expired), but on a short-term outlook, this move will be devastating to the company affected by it (and the results are already showing).

plawolf

Lieutenant General

What doesn’t kill you makes you stronger. Chinese tech firms have been lazy and complacent in sourcing off the shelf chips and components instead of doing the hard work of properly investing in domestic supply.

In the short term, ZTE’s fortunes will dip, but it, and all the other major Chinese tech companies, should now have all the impetus they need to make the costly investments needed to start getting Chinese domestic chips and other critical component suppliers up and running and create enough demand to make them a genuine world class competitor with enough economies of scale to play in the same league as the established big boys.

The US commerce department has done more to help China’s domestic chip fab industry with this move than the Chinese government has managed previously.

Beijing has been going slow and steady for fear that significant, rapid moves might alarm America and widen the trade deficit. But now it doesn’t need to worry too much about appearances and can just forge on at full speed.

Hendrik_2000

Lieutenant General

Seeking out domestic suppliers already puts their products two steps behind those of Apple/Samsung/etc. because the SoCs from Chinese suppliers are not as capable as Western-built chips. Nobody is saying that the Chinese won't invest in their domestic chip industry in the long run (by which time the US ban would've expired), but on a short-term outlook, this move will be devastating to the company affected by it (and the results are already showing).

Where did you get this idea, other than from your own prejudice and ignorance? Read this.

The question is whether HiSilicon can ramp up production to meet demand, and whether Huawei is willing to sell Kirin chips to its competitor. But the government will push Huawei to share technology with ZTE if the need arises. I guess it serves ZTE right: they were too lazy and thought only of short-term profit by depending on outside suppliers. Now they reap the bitter fruit.

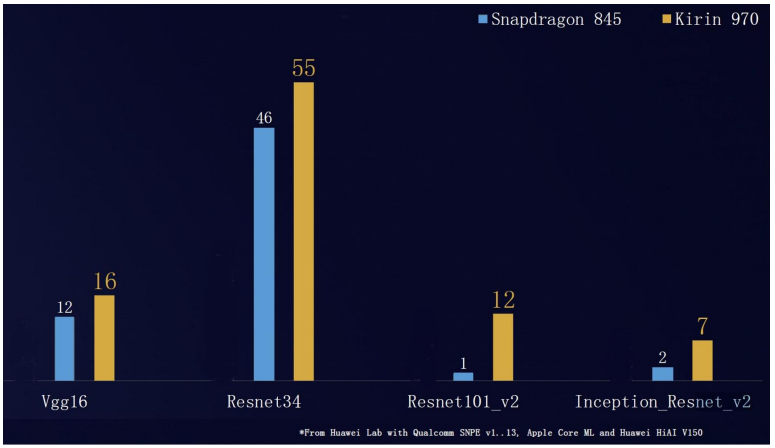

Why the Kirin 970 NPU is faster than the Snapdragon 845

5 DAYS AGO

As artificial intelligence creeps its way into our smartphone experience, SoC vendors have been racing to improve neural network and machine learning performance in their chips. Everyone has a different take on how to power these emerging use cases, but the general trend has been to include some sort of dedicated hardware to accelerate common machine learning tasks like image recognition. However, the hardware differences mean that chips offer varying levels of performance.

Last year it emerged that HiSilicon’s Kirin 970 came out ahead in a number of image recognition benchmarks. Honor recently published its own tests, claiming the chip performs better than the newer Snapdragon 845 as well.

We’re a little skeptical of the results when a company tests its own chips, but the benchmarks Honor used (Resnet and VGG) are commonly used pre-trained image recognition neural network algorithms, so a performance advantage isn’t to be sniffed at. The company claims up to a twelve-fold boost using its HiAI SDK versus the Snapdragon NPE. Two of the more popular results show between a 20 and 33 percent boost.

Regardless of the exact results, this raises a rather interesting question about the nature of neural network processing on smartphone SoCs. What causes the performance difference between two chips with similar machine learning applications?

DSP vs NPU approaches

The big difference between the Kirin 970 and the Snapdragon 845 is that HiSilicon’s option implements a Neural Processing Unit designed specifically for quickly processing certain machine learning tasks. Meanwhile, Qualcomm repurposed its existing Hexagon DSP design to crunch numbers for machine learning tasks, rather than adding in extra silicon specifically for these tasks.

With the Snapdragon 845, Qualcomm boasts up to tripled performance for some AI tasks over the 835. To accelerate machine learning on its DSP, Qualcomm uses its Hexagon Vector Extensions (HVX) which speeds up 8-bit vector math commonly used by machine learning tasks. The 845 also boasts a new micro-architecture that doubles 8-bit performance over the previous generation. Qualcomm’s Hexagon DSP is an efficient math crunching machine, but it’s still fundamentally designed to handle a wide range of math tasks and has been gradually tweaked to boost image recognition use cases.
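To make "8-bit vector math" concrete, here is a minimal Python sketch of a quantized dot product: floats are mapped to signed 8-bit integers with a scale factor, multiplied and accumulated as integers, and rescaled at the end. The function names and the symmetric quantization scheme are assumptions chosen for illustration; this is not Qualcomm's HVX API or the Snapdragon NPE.

```python
def quantize(values, scale):
    """Map floats to signed 8-bit integers (symmetric scheme, assumed for illustration)."""
    return [max(-128, min(127, round(v / scale))) for v in values]


def int8_dot(a_q, b_q, a_scale, b_scale):
    """Accumulate int8 products in a wide integer, then rescale to a float."""
    acc = sum(x * y for x, y in zip(a_q, b_q))  # integer-only inner loop
    return acc * a_scale * b_scale


# Hypothetical example values, not taken from any benchmark.
weights = [0.5, -0.25, 0.75]
inputs = [1.0, 2.0, -1.0]
w_scale = 0.75 / 127  # largest |weight| maps to 127
x_scale = 2.0 / 127   # largest |input| maps to 127

approx = int8_dot(quantize(weights, w_scale), quantize(inputs, x_scale), w_scale, x_scale)
exact = sum(w * x for w, x in zip(weights, inputs))
print(f"exact={exact:.4f} approx={approx:.4f}")
```

The integer result lands close to the floating-point one, which is why hardware that is fast at 8-bit vector operations can be good enough for image recognition workloads.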

The Kirin 970 also includes a DSP (a Cadence Tensilica Vision P6) for audio, camera image, and other processing. It’s in roughly the same league as Qualcomm’s Hexagon DSP, but it is not currently exposed through the HiAI SDK for use with third-party machine learning applications.

The Hexagon 680 DSP from the Snapdragon 835 is a multi-threaded scalar math processor. It’s a different take compared to the mass matrix multiply processors from Google or Huawei.

HiSilicon’s NPU is highly optimized for machine learning and image recognition, but is not any good for regular DSP tasks like audio EQ filters. The NPU was designed in collaboration with Cambricon Technology and is primarily built around multiple matrix multiply units.

You might recognize this as the same approach that Google took with its hugely powerful machine learning chips. Huawei’s NPU isn’t as huge or powerful as Google’s server chips, opting for a small number of 3 x 3 matrix multiply units, rather than Google’s large 128 x 128 design. Google also optimized for 8-bit math while Huawei focused on 16-bit floating point.
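As a rough illustration of what building around small matrix multiply units implies, the Python sketch below breaks a larger matrix product into 3 x 3 block products, each of which is the kind of work a single unit could handle. Only the tile size comes from the article; the loop order and the function names are assumptions, and nothing here reflects how the NPU actually schedules work.

```python
TILE = 3  # tile edge length, matching the 3 x 3 units mentioned above


def tile_matmul(a, b, n):
    """Multiply two n x n matrices (n divisible by TILE) one TILE x TILE block at a time."""
    c = [[0.0] * n for _ in range(n)]
    for i0 in range(0, n, TILE):
        for j0 in range(0, n, TILE):
            for k0 in range(0, n, TILE):
                # One unit of work: a TILE x TILE block product accumulated into c.
                for i in range(i0, i0 + TILE):
                    for j in range(j0, j0 + TILE):
                        c[i][j] += sum(a[i][k] * b[k][j] for k in range(k0, k0 + TILE))
    return c


def naive_matmul(a, b, n):
    """Reference implementation used to check the tiled version."""
    return [[sum(a[i][k] * b[k][j] for k in range(n)) for j in range(n)] for i in range(n)]


n = 6
a = [[float(i + j) for j in range(n)] for i in range(n)]
b = [[float(i * j % 5) for j in range(n)] for i in range(n)]
tiled = tile_matmul(a, b, n)
reference = naive_matmul(a, b, n)
print(tiled == reference)
```

With this decomposition a 6 x 6 product needs (6 / 3)^3 = 8 block products, so the number of units and how busy they can be kept largely determines throughput.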

The performance differences come down to architecture choices between more general DSPs and dedicated matrix multiply hardware.

The key takeaway here is Huawei’s NPU is designed for a very small set of tasks, mostly related to image recognition, but it can crunch through the numbers very quickly — allegedly up to 2,000 images per second. Qualcomm’s approach is to support these math operations using a more conventional DSP, which is more flexible and saves on silicon space, but won’t quite reach the same peak potential. Both companies are also big on the heterogeneous approach to efficient processing and have dedicated engines to manage tasks across the CPU, GPU, DSP, and in Huawei’s case its NPU too, for maximum efficiency.

Qualcomm sits on the fence

So why is Qualcomm, a high-performance mobile application processor company, taking a different approach to HiSilicon, Google, and Apple for its machine learning hardware? The immediate answer is probably that there just isn’t a meaningful difference between the approaches at this stage.

Sure, the benchmarks might express different capabilities, but the truth is there isn’t a must-have application for machine learning in smartphones right now. Image recognition is moderately useful for organizing photo libraries, optimizing camera performance, and unlocking a phone with your face. If these can be done fast enough on a DSP, CPU, or GPU already, it seems there’s little reason to spend extra money on dedicated silicon. LG is even doing real-time camera scene detection using a Snapdragon 835, which is very similar to Huawei’s camera AI software using its NPU and DSP.

Qualcomm's DSP is widely used by third-parties, making it easier for them to start implementing machine learning on its platform.

In the future, we may see the need for more powerful or dedicated machine learning hardware to power more advanced features or save battery life, but at the moment the use cases are limited. Huawei might change its NPU design as the requirements of machine learning applications change, which could mean wasted resources and an awkward decision about whether to continue supporting outdated hardware. An NPU is also yet another bit of hardware third-party developers have to decide whether or not to support.

Qualcomm also has a history of dismissing novel or niche ideas only to quickly adopt similar technologies of its own once the market moves in that direction. Cast your minds back to the company dismissing 64-bit mobile application processors as a gimmick.

Qualcomm may well go down the dedicated neural network processor route in the future, but only if the use cases make the investment worthwhile. Arm’s recently announced Project Trillium hardware is certainly a possible candidate if the company doesn’t want to design a dedicated unit in-house from scratch, but we’ll just have to wait and see.

Hendrik_2000

Lieutenant General

Huawei’s Kirin 970 Outscores Qualcomm Snapdragon 845 In Test

December 24, 2017 - Written By

Huawei’s HiSilicon Kirin 970 system-on-chip outscored the Qualcomm-made Snapdragon 845 in some synthetic benchmarks by approximately seven percent, according to the screenshots seen below which recently started circulating on Chinese social media platform Weibo. The difference between the two isn’t massive and is possibly even smaller than the newly uncovered results suggest seeing how they only show a couple of individual tests and not the average of a larger number of results. Likewise, synthetic benchmark scores are hardly indicative of real-world performance, especially in the context of such small margins.

With hardware only being part of the equation, it’s up to original equipment manufacturers to optimize their smartphones and the software running on them in a manner that takes maximum advantage of their silicon of choice. Still, the spotted benchmark results indicate that Huawei’s semiconductor subsidiary is now at the very least on par with Qualcomm in regards to the pure computational power of their flagship chips. The Kirin 970 is described by the Chinese tech giant as an artificial intelligence SoC, being equipped with a Neural Processing Unit solely dedicated to machine learning and general AI applications. Qualcomm’s Snapdragon 845 also places a large focus on AI and will undoubtedly enjoy much wider adoption than the Kirin 970, which is only expected to power mobile devices from Huawei and its subsidiary Honor. Several such offerings like the Huawei Mate 10 Pro and Honor V10 have already been announced and more should be introduced come 2018, starting with the P11 lineup. On the other hand, the Snapdragon 845 should power the vast majority of Android flagships released over the course of the next year, including premium products from Samsung, Sony, LG, and Xiaomi. Overall, the Snapdragon 845 should be at least as popular as its direct predecessor which already powers well .

The semiconductor industry still won’t be moving away from the 10nm process node in 2018 but a jump to the 7nm is expected in early 2019, being pioneered by TSMC that’s also said to be the manufacturer of the Snapdragon 855 and replace Samsung as Qualcomm’s chip partner. Samsung’s foundry business is skipping the commercialization of the regular 7nm standard and is instead focusing on delivering one that takes advantage of extreme ultraviolet (EUV) lithography, industry sources said earlier this month, suggesting that Qualcomm will return to Samsung for the Snapdragon 865 that’s expected to be commercialized in 2020.

Equation

Lieutenant General

Yeah, that's what the British thought and said before, and now look at them. You cannot reverse a downfall with the push of a button and then have everything be hunky-dory again without any effort or sacrifice going into it. That requires great leadership in all sectors of government and society, strong enough to make tough, smart decisions and to see things through. History has shown that already. I don't see that happening in America, whether in the White House or on Capitol Hill.

Population. Even if all the developing nations of this world are stunningly successful in furthering their development while maintaining social cohesion and so go on to achieve similar wealth and power as first world nations, the United States will still remain amongst the most powerful of nations by virtue of being larger than all but three of them. In the absence of civil war and national collapse, or an economic-military revolution from abroad akin to that which enabled the age of European imperialism, or 'hand of god' events such as plague, eruption of Yellowstone, nuclear annihilation, etc. the United States will remain a great power for the foreseeable future.

Equation

Lieutenant General

Yeah, at the same time you also deter any future deals by others as well. The world also gets to see how the US behaves and will therefore go to the other super guys to make deals instead. An example is the China-led AIIB bank system. Remember when the US advised all the other nations NOT to join, for fear of giving China more say and status in dictating the world economy? Well, guess what happened: they didn't listen or care.

That's usually how precedents are set to deter future cases of illicit conduct. Huawei & other Chinese tech companies are next if they don't abide by US sanctions or if Washington wishes to pull another trump card in its trade spat with Beijing.

Hendrik_2000

Lieutenant General

Tariffs have had no impact on Chinese steel and aluminium exports

China April aluminium, steel exports rise, defying U.S. tariffs

* Aluminium exports rise slightly to 451,000 t in April

* Steel exports up 14.7 pct from March, highest since Aug 2017

* U.S. tariffs not having material impact on aluminium - analyst (Adds analyst comment on steel)

By Tom Daly and Muyu Xu

BEIJING, May 8 (Reuters) - China's aluminium exports inched higher and steel shipments jumped in April, customs data showed on Tuesday, as U.S. import tariffs failed to dent overseas shipments from the world's biggest producer of the two metals.

The United States imposed a 25 percent duty on steel imports and a 10 percent tariff on aluminium imports, effective from March 23 as U.S. President Donald Trump sought to protect U.S. metal makers.

The impact of the tariffs on aluminium was offset by U.S. sanctions on giant Russian producer Rusal that caused a spike in international prices, prompting Chinese firms to send more metal abroad, analysts said.

"Exports are still happening, which suggests the tariff is not really having a material impact at this point," said Lachlan Shaw, a metals analyst at UBS in Melbourne.

"Certainly anecdotal reporting from within the U.S. aluminium products manufacturing sector suggests that some fabricators are just paying the tariffs to secure the required imports. So it appears that trade is holding up for now," he added.

China's unwrought aluminium and aluminium product exports came in at 451,000 tonnes last month, up 0.2 percent from a revised 450,000 tonnes in March and up 4.9 percent from 430,000 tonnes in April 2017, the General Administration of Customs said.

Steel exports jumped 14.7 percent from March to 6.48 million tonnes, their highest level since August last year, and steady with a year ago.

April has one less day than March, so the latest increases are greater on a daily basis.
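The per-day comparison can be worked out from the figures quoted above. This is a back-of-the-envelope sketch: the March steel volume is not given in the article, so it is backed out from the 14.7 percent rise, and the variable and function names are my own.

```python
APRIL_DAYS, MARCH_DAYS = 30, 31

aluminium_april_t = 451_000   # tonnes, from the article
aluminium_march_t = 450_000   # tonnes (revised), from the article
steel_april_mt = 6.48         # million tonnes, from the article
steel_monthly_growth = 0.147  # 14.7 percent rise from March, from the article

# March steel volume is not stated directly; back it out from the growth rate.
steel_march_mt = steel_april_mt / (1 + steel_monthly_growth)


def daily_growth(april_total, march_total):
    """Percentage change in average daily shipments from March to April."""
    april_daily = april_total / APRIL_DAYS
    march_daily = march_total / MARCH_DAYS
    return (april_daily / march_daily - 1) * 100


aluminium_daily_pct = daily_growth(aluminium_april_t, aluminium_march_t)
steel_daily_pct = daily_growth(steel_april_mt, steel_march_mt)
print(f"aluminium: {aluminium_daily_pct:.1f}%, steel: {steel_daily_pct:.1f}%")
```

On a daily basis that works out to roughly a 3.6 percent rise for aluminium (against 0.2 percent month on month) and about 18.5 percent for steel (against 14.7 percent).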

The United States accounts for around 14 percent of China's aluminium exports, but only 1 percent of its steel exports.

Washington imposed sanctions on Rusal, the world's second-biggest aluminium producer, on April 6, causing London Metal Exchange aluminium prices to climb 12.5 percent last month on fears of a supply shortage.

Shanghai aluminium prices rose only 4.8 percent in April in a well supplied Chinese market, with the wider price arbitrage making Chinese exports more profitable.
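To put a number on the wider arbitrage, the two April price moves can be combined into a change in the LME-to-Shanghai price ratio. This is a simplified sketch: it uses only the two percentages from the article and ignores exchange rates, freight, and export taxes, none of which are given here.

```python
lme_rise = 0.125       # LME aluminium price rise in April, from the article
shanghai_rise = 0.048  # Shanghai aluminium price rise in April, from the article

# Change in the LME-to-Shanghai price ratio, independent of the starting price levels.
ratio_change_pct = ((1 + lme_rise) / (1 + shanghai_rise) - 1) * 100
print(f"LME/Shanghai price ratio widened by about {ratio_change_pct:.1f}%")
```

Whatever the starting gap was, overseas prices gained a bit over 7 percent relative to Shanghai prices during the month, which is the incentive to export that the analysts describe.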

The jump in steel exports came despite the tariffs and a 7.6 percent jump in Shanghai rebar prices in April, making Chinese steel more expensive.

"Exports went up while the steel price rallied and there was firm demand in China, which would mean steel output in China really went up a lot," said Xu Bo, an analyst at Haitong Futures.

April was the first full month after the end of winter restrictions on industrial output in northern China, including on steel and aluminium, although some key steel cities still have curbs in place.

For more details, see (Reporting by Tom Daly and Muyu Xu; additional reporting by Melanie Burton in MELBOURNE; editing by Richard Pullin)
