I was just curious, because she "re-signed" a document with China that is almost like the "BRI" one, just without the "BRI" label, right? Also, OpenAI was banned two years ago...

...but then re-enabled after a month or so.

This is just because the regulators think their job is to block AI apps. It's their way of showing they are alive and doing something to earn their monthly wages, so when one of these rare occasions comes along, they jump on it. Unfortunately, there's not much more to read into it.

But in DeepSeek's case, I doubt it will be re-enabled the way OpenAI was. The difference is that OpenAI stores user data in the US, while DeepSeek stores it in China. For a European country, those are not the same thing.

She is quite cunning and deceptive; she turned on a dime from "Biden is my hero" to Trump. Now Trump is her lighthouse: if you ask her about Biden, she replies "Biden who?". What that answer means is that she will not oppose Trump taking Greenland... but of course, because Italy is part of the EU, she will never say so openly.


Artificial Intelligence thread

- Thread starter 9dashline


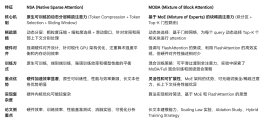

Kimi seems to be in sync with DeepSeek. Last time, it released Kimi 1.5 at the same time as DeepSeek. Yesterday, DeepSeek released NSA (Native Sparse Attention), a technique for more efficient long-text processing, and a few hours later Kimi released a similar technique, MoBA (Mixture of Block Attention), together with the code for training and inference.

github.com/MoonshotAI/MoBA

Similarities between the two:

1. Both use sparse attention: instead of every token attending to every other token, each token attends selectively to a subset of tokens, which reduces the amount of computation.

2. Both operate on blocks: the long text is divided into multiple blocks, and the sparse selection or computation is done at the block level.

3. Both are compatible with the Transformer architecture and can replace the standard attention mechanism inside an existing Transformer model.
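To make the block idea concrete, here is a toy, loop-based sketch of block-sparse causal attention in the spirit of MoBA. It is not MoonshotAI's code: the function names are hypothetical, and the specific choices (scoring each past block by the query's dot product with that block's mean-pooled key, keeping the top `top_k - 1` past blocks plus the query's own block) are assumptions made for illustration. NSA combines block selection with other branches (such as a sliding window), which this sketch does not model.

```python
import math


def softmax(xs):
    # Numerically stable softmax over a list of floats.
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def attend(query, keys, values, idx):
    # Scaled dot-product attention of one query over the token positions in idx.
    d = len(query)
    weights = softmax([dot(query, keys[j]) / math.sqrt(d) for j in idx])
    return [sum(w * values[j][c] for w, j in zip(weights, idx)) for c in range(len(values[0]))]


def full_causal_attention(q, k, v):
    # Dense baseline: token i attends to every token 0..i.
    return [attend(q[i], k, v, list(range(i + 1))) for i in range(len(q))]


def block_sparse_attention(q, k, v, block_size, top_k):
    # Hypothetical MoBA-style sketch: split keys into blocks, score each past block
    # by the query's dot product with the block's mean-pooled key, keep the best
    # (top_k - 1) past blocks plus the query's own block, then attend only there.
    n = len(q)
    blocks = [list(range(s, min(s + block_size, n))) for s in range(0, n, block_size)]
    pooled = [[sum(col) / len(b) for col in zip(*(k[j] for j in b))] for b in blocks]
    out = []
    for i in range(n):
        cur = i // block_size
        past = range(cur)  # future blocks are never candidates (causality)
        ranked = sorted(past, key=lambda b: dot(q[i], pooled[b]), reverse=True)
        chosen = sorted(ranked[: max(top_k - 1, 0)]) + [cur]
        # Inside the query's own block, only tokens up to position i are visible.
        idx = [j for b in chosen for j in blocks[b] if j <= i]
        out.append(attend(q[i], k, v, idx))
    return out
```

When `top_k` covers every block, the sketch reduces to ordinary causal attention; with a small `top_k`, each query scores only the pooled block keys and then attends to at most `top_k * block_size` tokens instead of all previous tokens, which is where the savings on long texts would come from.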


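Aside from saying DeepSeek forced them to raise their game, Baidu said 22% of searches right now include AI-generated content. They are making real changes to basic search. So yeah, they need to deeply integrate DeepSeek into their platform.

Luo Rong: Currently, about 22% of search result pages contain AI-generated content, but much more work is going on behind the scenes. We are fundamentally transforming our search service to make it more powerful and more efficient than ever. As Robin Li mentioned in his prepared remarks, with ERNIE Bot we are expanding search beyond text and links to a diverse range of content formats, including short videos, livestreams, smart-assistant notes, and product showcases. These formats can be combined dynamically to create a personalized search experience. As we continue to reform our search products, we are also developing features that enable deeper personalization, adapting to each user's habits and preferences.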

Tencent came out with its own Hunyuan-T1 model, which can also do deep search.

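Looks like in Tencent Yuanbao you can now choose between the two for web search. Tencent Yuanbao has become an "AI aggregator": once in the chat interface, users can switch freely between models and use them for free. IT Home summarized Tencent Yuanbao's features as follows:

- Integrates the full-strength DeepSeek-R1 671B, alongside Tencent Hunyuan's in-house deep-thinking model T1

- Full support for web search (including with Hunyuan T1 and DeepSeek), drawing on internet sources as well as Tencent-ecosystem content such as WeChat Official Accounts and WeChat Channels

- When asking questions through Yuanbao or the Yuanbao mini program, files can be uploaded from WeChat and parsed with one click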

This is bad for Baidu.

Silicon Flow closed a pre-A funding round of several hundred million RMB.

Zhejiang University has launched the full-strength ("full-blooded") version of R1. It runs on 西湖之光 ("Light of West Lake") computing power.

Looks like Tsinghua University fine-tuned a version of R1 for learning.

Tomorrow marks one month since DeepSeek R1 was released, and I think it's fair to say that, at least for Chinese domestic AI, it's been a second coming of the Cambrian Explosion... I don't think anything in the history of mankind, and certainly not in China's own 5000+ year history, has been adopted so quickly.

DeepSeek R1 weights released...

This is like dropping an atomic warhead on OpenAI

Mother of God this one is stronk... beats o1 medium....

Turns out this is the real "AI Diffusion" (not Biden's). They chose to release it on the morning of Trump's inauguration, right around the "StarGate" reveal, and their recent breakthrough paper underlined that it was taking on the "NSA" lol (intentional play on words). That's probably also why there were massive DDoS attacks trying to suppress DeepSeek's worldwide usage, etc. I don't think any of this was coincidental...

Well, there was also the Android moment, when all the Chinese OEMs quickly adopted Android to commoditize the market.


That was very impressive for that time.

Grok 3 pulled a Volkswagen: they hit the benchmark, then gave users a crappier version. Although this could also be a made-up, face-saving excuse.

AI CUDA is a good idea. Need AI MindSpore to match them.


According to their own blog post, they sort of gamed the benchmark by using cons@64 (the consensus answer across 64 attempts), while the other models were given only a single try. The AIME benchmark has only 30 questions, so Grok 3 basically gets about one more question right than R1 and about one fewer than o3-mini (high).
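A small sketch of why cons@64 and single-try scores aren't comparable, and of the "one question" arithmetic. This does not reproduce xAI's actual evaluation: the 64 sampled answers below are made up, the helper names are hypothetical, and the 30-question count is the one quoted in the post. Majority voting can turn a problem the model solves only about 40% of the time into a guaranteed point, and on a 30-question test each question is worth 100/30, roughly 3.3 percentage points.

```python
from collections import Counter

AIME_QUESTIONS = 30  # question count quoted in the post


def cons_at_k(samples):
    # Consensus (majority-vote) answer over k sampled answers.
    # Counter.most_common keeps first-seen order for ties.
    return Counter(samples).most_common(1)[0][0]


def pass_at_1(samples, correct):
    # Expected single-try accuracy: the fraction of samples that are correct.
    return sum(s == correct for s in samples) / len(samples)


def points_to_questions(delta_pct, n_questions=AIME_QUESTIONS):
    # Convert a benchmark-score gap in percentage points into a number of questions.
    return delta_pct / 100 * n_questions


# Hypothetical 64 samples for one problem whose correct answer is "17":
samples = ["17"] * 26 + ["3"] * 20 + ["8"] * 18
print(pass_at_1(samples, "17"))   # 0.40625
print(cons_at_k(samples) == "17")  # True
print(round(points_to_questions(100 / AIME_QUESTIONS), 6))  # 1.0
```

Because the wrong answers are split between "3" and "8", the single most common answer is still the correct one, so cons@64 scores this problem as solved even though a single try succeeds only about 41% of the time.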


Musk used about 120x more GPUs than DeepSeek, yet Grok is only marginally better than R1... wtf?!?

Seems like raw brute-forcing has hit a hard wall of diminishing returns.

That's probably also why we haven't had a new GPT version since 2023, and Claude 3.5 Opus is still nowhere to be found.
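To put the diminishing-returns point in numbers: if loss falls as a power law in training compute, $L(C) = a\,C^{-\alpha}$, then multiplying compute by a factor $r$ multiplies loss by $r^{-\alpha}$. The exponent $\alpha = 0.05$ below is an assumed, illustrative value, the 120x figure is the poster's estimate, and lower loss does not translate one-to-one into benchmark scores, so treat this as a rough sketch only:

$$
\frac{L(120\,C)}{L(C)} = 120^{-\alpha} = e^{-0.05\,\ln 120} \approx e^{-0.239} \approx 0.79
$$

Under these assumptions, 120 times the compute would cut loss by only about 21%.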