The ship has long since sailed on the US preventing China from emerging as a peer competitor in high tech. I don't think US think-tankers have quite understood this. Now the main challenge for the US is merely to maintain its position.

News on China's scientific and technological development.

- Thread starter Quickie

The Shenzhou-14 mission uses a new technology that greatly improves the quality of the real-time video feed from the crew capsule.

"Unlike the space station, the crew capsule does not have a high-bandwidth network connection to the ground. The video feed from the crew capsule used to be poor because its resolution was only 352×288."

"A team of researchers from the University of Science and Technology of China, led by Professor Wu Feng, was tasked with solving the problem. Based on the non-uniform rate-distortion theory for multimedia (非均匀率失真理论) established by Prof. Wu, the team developed a "deep learning video compression and resolution enhancement technology" (深度学习压缩视频超分辨率增强技术). This new technology raises the video resolution by more than 16 times, to 1920×1080. The peak signal-to-noise ratio is also increased by more than 4 dB."

"The researchers then built a real-time streaming-media enhancement system that processes up to 25 frames per second with an end-to-end latency below 1 second. This system has been used in the Shenzhou-14 crew capsule to provide a higher-definition video feed."

Professor Wu Feng received the 2021 IEEE CAS Mac Van Valkenburg Award.

News release in English on the award and Prof. Wu by USTC:

News report in Chinese:

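Hefei, June 9 (reporter Wu Lan): The University of Science and Technology of China told reporters on the 9th that images from the return capsule of the Shenzhou-14 crewed spacecraft used, for the first time, a streaming-media image quality enhancement system developed by Professor Wu Feng's research group at the university, significantly improving image clarity and quality.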

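The return capsule of the Shenzhou crewed spacecraft is the module the astronauts ride in during launch, rendezvous and docking, and the return to the ground. Unlike the space station in orbit, the return capsule has extremely limited communication-link resources with the ground, and conventional video communication technology severely limited the resolution and quality of its images. On Shenzhou-13 and earlier spacecraft, the effective resolution of return-capsule images was only 352×288, which could hardly meet today's requirements for high-resolution, large-screen display.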

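After receiving the requirement in November 2021, Wu Feng's group organized a dedicated research effort. Based on the non-uniform rate-distortion theory they had established, they proposed a deep-learning super-resolution enhancement technology for compressed video, raising image resolution by more than 16 times to 1920×1080 and increasing the peak signal-to-noise ratio by more than 4 dB.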
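The resolution and PSNR figures can be sanity-checked with some quick arithmetic. Counting total pixels, 1920×1080 is about 20.5 times 352×288, which fits the "more than 16 times" claim. Since PSNR is defined as 10·log10(MAX²/MSE), a 4 dB gain at a fixed peak value means the mean squared error drops by a factor of about 2.5. A small Python sketch of both calculations (the function names are illustrative, not from the report):

```python
import math

def pixel_ratio(src, dst):
    """Ratio of total pixel counts between two (width, height) resolutions."""
    return (dst[0] * dst[1]) / (src[0] * src[1])

def mse_ratio_for_psnr_gain(gain_db):
    """Factor by which MSE shrinks for a PSNR gain of gain_db at a fixed peak value.

    PSNR = 10 * log10(MAX**2 / MSE), so +g dB divides MSE by 10**(g / 10).
    """
    return 10 ** (gain_db / 10)

def psnr(reference, test, max_value=255):
    """PSNR in dB between two equal-length sequences of pixel values."""
    mse = sum((r - t) ** 2 for r, t in zip(reference, test)) / len(reference)
    return float("inf") if mse == 0 else 10 * math.log10(max_value ** 2 / mse)

print(round(pixel_ratio((352, 288), (1920, 1080)), 2))  # 20.45
print(round(mse_ratio_for_psnr_gain(4), 2))             # 2.51
```

Note that the aspect ratio also changes (352×288 is 11:9, 1920×1080 is 16:9), so the per-axis factors differ (about 5.5× horizontally, 3.75× vertically); the report does not say how the frame is reshaped.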

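The group further developed an image enhancement system that supports real-time streaming-media processing, with a processing speed of 25 frames per second and an end-to-end processing latency of less than 1 second. The system is used in video communication between the return capsule and the ground to improve image clarity and quality. After deployment, the subjectively perceived video quality improved significantly, and the improvement is even more noticeable on large displays at 4K and above.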
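A quick look at what 25 frames per second with under 1 second of latency implies, assuming steady-state streaming: each frame must be handled in 40 ms on average, but because the latency bound is much larger than that, processing can be pipelined, with (by Little's law, items in system = throughput × time in system) up to about 25 frames in flight at once. This is only arithmetic on the reported numbers; the report does not describe the system's internal design.

```python
FPS = 25              # reported processing speed, frames per second
MAX_LATENCY_S = 1.0   # reported upper bound on end-to-end latency, seconds

def per_frame_budget_ms(fps):
    """Average time available per frame to sustain the given frame rate."""
    return 1000.0 / fps

def max_frames_in_flight(fps, latency_s):
    """Little's law: frames in the pipeline = throughput x time each frame spends in it."""
    return fps * latency_s

print(per_frame_budget_ms(FPS))                   # 40.0
print(max_frames_in_flight(FPS, MAX_LATENCY_S))   # 25.0
```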

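At present, the image enhancement system has been used throughout the pre-launch, launch, ascent, and rendezvous-and-docking phases of the Shenzhou-14 crewed spacecraft, and it can later be used for the return phase of Shenzhou-14 and for subsequent Shenzhou missions.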

The IEEE Circuits and Systems Society (CAS) presented the 2021 IEEE CAS Mac Van Valkenburg Award to Professor WU Feng for his “contributions in multimedia non-uniform coding and communications” on May 24, 2021. This is the first time the prestigious award has been presented to a scholar from mainland China.

Professor WU Feng is an expert in the field of media streaming. He has conducted research on the theory, key technologies, standardization, and industrial applications of media streaming for more than 20 years. He established the non-uniform rate-distortion theory for multimedia, solved several key technical problems in efficient media compression and adaptive media transmission, and advanced the field of media streaming in China. He has authored two books and more than 180 journal papers, and has been granted more than 150 patents.

WU’s technical proposals have been adopted into MPEG-4, H.264, H.265, and other international video compression standards. He is the chair of the IEEE Data Compression Standard Committee. His contributions helped our nation achieve important breakthroughs in the competition over international video coding standards. He led the development of a state-of-the-art real-time H.264 codec, which was adopted as a component of the Windows 7 media kit and was installed more than 450 million times. He developed the first H.265 media streaming system based on 4G (LTE), and later an H.265 hardware codec, which has been adopted in smartphones with chip shipments reaching 100 million. Technologies he developed are also widely used in industrial products and services such as Tencent Meeting.

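On May 24, 2021, the IEEE Circuits and Systems (CAS) Society presented the 2021 IEEE CAS Mac Van Valkenburg Award to Professor Wu Feng of the University of Science and Technology of China in recognition of his "contributions in multimedia non-uniform coding and communications". This is the first time in the award's history that it has been presented to a scholar from mainland China.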

the team developed a "deep learning video compression and resolution enhancement technology"

is this kinda like nvidia's dlss?

Sorry, I don't know. I know nothing about the Nvidia stuff, and the news report lacks the details needed for a comparison.

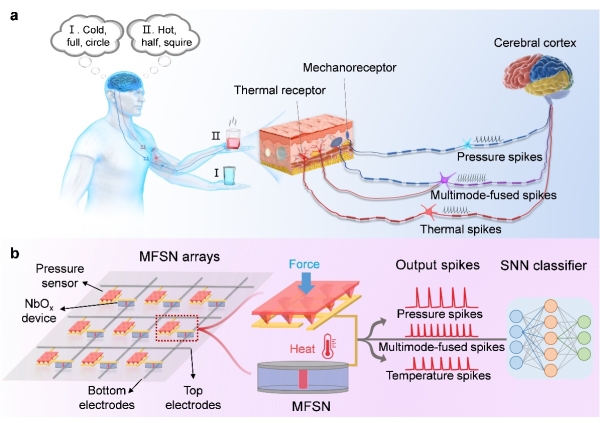

A Heterogeneously Integrated Spiking Neuron Array for Multimode-Fused Perception and Object Classification

Multimode-fused sensing in the somatosensory system helps people obtain comprehensive object properties and make accurate judgments. However, building such multisensory systems with conventional metal–oxide–semiconductor technology presents serious challenges in device integration and circuit complexity. Here, a multimode-fused spiking neuron (MFSN) with a compact structure that achieves human-like multisensory perception is reported. The MFSN heterogeneously integrates a pressure sensor to process pressure and an NbOx-based memristor to sense temperature. Using this MFSN, multisensory analog information can be fused into one spike train, showing excellent data compression and conversion capabilities. Moreover, pressure and temperature information can both be distinguished from the fused spikes by decoupling the output frequencies and amplitudes, supporting multimodal tactile perception. A 3 × 3 MFSN array is then fabricated, and the fused frequency patterns are fed into a spiking neural network for enhanced tactile pattern recognition. Finally, a larger MFSN array is simulated for classifying objects with different shapes, temperatures, and weights, validating the feasibility of MFSNs for practical applications. The proof-of-concept MFSNs enable the building of multimodal sensory systems and contribute to the development of highly intelligent robotics.
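The idea of turning an analog stimulus into a spike train, where a stronger input means more spikes per second, can be illustrated with a leaky integrate-and-fire (LIF) neuron. This is a standard textbook abstraction, not a model of the NbOx memristor in the paper, and all parameter values below (time constant, threshold, input levels) are arbitrary assumptions:

```python
def lif_spike_times(input_level, duration_s=1.0, dt=1e-4, tau=0.02,
                    threshold=1.0, v_reset=0.0):
    """Simulate a leaky integrate-and-fire neuron driven by a constant input.

    Membrane dynamics: dv/dt = (input_level - v) / tau, integrated with forward
    Euler. When v reaches threshold, a spike time is recorded and v is reset.
    """
    v = v_reset
    spikes = []
    for step in range(int(round(duration_s / dt))):
        v += dt * (input_level - v) / tau
        if v >= threshold:
            spikes.append((step + 1) * dt)
            v = v_reset
    return spikes

# A sub-threshold input never fires; stronger inputs fire at higher rates.
for level in (0.9, 1.5, 3.0):
    print(level, "->", len(lif_spike_times(level)), "spikes in 1 s")
```

An input below the threshold never fires, and larger inputs fire more often; that monotonic input-to-rate mapping is what lets a downstream network read stimulus strength from spike frequency.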

Institute of Microelectronics makes important progress in multimodal neuromorphic perception

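Recently, the team of Academician Liu Ming at the Institute of Microelectronics and the team of Professor Liu Qi at Fudan University jointly developed a compact multimode-fused spiking neuron (MFSN) array. The array consists of heterogeneously integrated pressure sensors and NbOx memristors (Fig. 1b): the pressure sensor senses pressure, while the NbOx memristor generates the spike output and senses temperature changes. When pressure and temperature stimuli act on the MFSN simultaneously, the multimodal analog sensory information can be fused into a single spike train, demonstrating excellent data compression and spike conversion capabilities. In addition, by decoupling the frequency and amplitude of the output spikes, independent pressure and temperature information can be recovered from the fused signal, supporting both the neuron's fidelity for single-modality information and its multimodal perception capability. The team further combined the MFSN array with a spiking neural network (SNN) to build an artificial multimodal perception system, successfully emulating the human somatosensory system's ability to perceive multimodal information (temperature and pressure) and to classify multimodal objects (objects of different temperatures, weights and shapes). This work makes it possible to build efficient multimodal spiking perception systems and offers a new approach to developing highly intelligent robotics.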
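As a toy illustration of fusing two readings into one spike train and then decoupling them, the sketch below lets pressure set the spike rate and temperature set the spike amplitude. Which stimulus drives which property, and the linear calibration constants, are hypothetical assumptions; the report does not give the device characteristics.

```python
# Hypothetical linear calibrations, chosen only for illustration.
F0_HZ, HZ_PER_KPA = 10.0, 2.0    # spike rate at zero pressure; rate gain per kPa
A0_V, V_PER_DEG_C = 1.0, 0.01    # spike amplitude at 25 °C; amplitude gain per °C

def encode(pressure_kpa, temp_c, window_s=1.0):
    """Fuse two analog readings into one spike train of (time_s, amplitude_v) pairs.

    Pressure sets the spike rate; temperature sets the spike amplitude.
    """
    rate = F0_HZ + HZ_PER_KPA * pressure_kpa
    amplitude = A0_V + V_PER_DEG_C * (temp_c - 25.0)
    n_spikes = int(rate * window_s)
    return [(k / rate, amplitude) for k in range(n_spikes)]

def decode(spikes, window_s=1.0):
    """Recover (pressure_kpa, temp_c) from the spike count and mean spike amplitude."""
    if not spikes:
        raise ValueError("no spikes in window")
    rate = len(spikes) / window_s
    amplitude = sum(a for _, a in spikes) / len(spikes)
    return (rate - F0_HZ) / HZ_PER_KPA, 25.0 + (amplitude - A0_V) / V_PER_DEG_C

pressure, temp = decode(encode(20.0, 40.0))
print(pressure, round(temp, 6))
```

With a 1 s window the rate is only resolved to 1 Hz, so under these made-up constants pressure is resolved to 0.5 kPa; longer windows trade latency for resolution.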

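Figure 1. Biological and artificial somatosensory systems. (a) Schematic of a human hand perceiving the temperature, weight and shape of a cup. (b) Schematic of an artificial somatosensory system, composed of an MFSN array and an SNN classifier, emulating tactile perception.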

The same team mentioned in the previous post published another paper reporting their work on in-situ self-organizing maps implemented with memristor crossbar arrays.

Published paper in English:

News release in Chinese by CAS:

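Figure 1. Schematic of the SOM and its implementation on a memristor array. (a) Schematic of the SOM network. (b) Typical I-V curve of the memristor. (c) Optical image of the 128×64 1T1R memristor array. (d) Schematic of implementing a 2D SOM with the 1T1R memristor array.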

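Figure 2. Applications of the memristor-based SOM system. (a) Image processing (segmentation); (b) solving a combinatorial optimization problem (the TSP).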

Implementing in-situ self-organizing maps with memristor crossbar arrays for data mining and optimization

A self-organizing map (SOM) is a powerful unsupervised learning neural network for analyzing high-dimensional data in various applications. However, hardware implementation of SOM is challenging because of the complexity of calculating similarities and determining neighborhoods. We experimentally demonstrated, for the first time, a memristor-based SOM built on Ta/TaOx/Pt 1T1R chips. By using the topological structure of the array and physical laws for computing, without complicated circuits, it offers advantages in computing speed, throughput, and energy efficiency over its CMOS digital counterpart. We employed additional rows in the crossbar arrays and identified the best matching units by directly calculating the similarities between the input vectors and the weight matrix in hardware. Using the memristor-based SOM, we demonstrated data clustering and image processing, and solved the traveling salesman problem with much-improved energy efficiency and computing throughput. The physical implementation of SOM in memristor crossbar arrays extends the capability of memristor-based neuromorphic computing systems in machine learning and artificial intelligence.
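The traveling-salesman demo mentioned in the abstract is a classic SOM application: neurons are arranged on a closed ring, the ring is repeatedly pulled toward randomly chosen cities, and the cities are then visited in the order of their nearest ring neuron. Below is a minimal software sketch of that general technique, assuming city coordinates scaled to the unit square; `som_tsp`, `tour_length` and the learning-rate and neighborhood schedules are my own illustrative choices, and nothing here describes how the memristor hardware maps the problem.

```python
import math
import random

def som_tsp(cities, iterations=20000, neurons_per_city=8, seed=0):
    """Approximate a TSP tour with a ring-shaped 1-D SOM.

    cities: list of (x, y) points, assumed scaled to the unit square.
    Returns a list of city indices in visiting order.
    """
    rng = random.Random(seed)
    m = neurons_per_city * len(cities)
    ring = [(rng.random(), rng.random()) for _ in range(m)]   # neuron positions
    lr, radius = 0.8, m / 10.0

    def nearest_neuron(px, py):
        return min(range(m), key=lambda j: (ring[j][0] - px) ** 2 + (ring[j][1] - py) ** 2)

    for _ in range(iterations):
        cx, cy = rng.choice(cities)
        winner = nearest_neuron(cx, cy)
        for j in range(m):
            d = abs(j - winner)
            d = min(d, m - d)                               # distance along the closed ring
            h = math.exp(-(d * d) / (2 * radius * radius))  # Gaussian neighborhood
            x, y = ring[j]
            ring[j] = (x + lr * h * (cx - x), y + lr * h * (cy - y))
        lr *= 0.99997                         # slowly decay the learning rate
        radius = max(radius * 0.9997, 1.0)    # shrink the neighborhood, keep it >= 1 neuron

    # Visit cities in the order of their closest neuron along the ring.
    return sorted(range(len(cities)), key=lambda i: nearest_neuron(*cities[i]))

def tour_length(cities, order):
    """Length of the closed tour visiting cities in the given order."""
    return sum(math.dist(cities[order[k]], cities[order[(k + 1) % len(order)]])
               for k in range(len(order)))

ring_cities = [(0.5 + 0.4 * math.cos(2 * math.pi * k / 8),
                0.5 + 0.4 * math.sin(2 * math.pi * k / 8)) for k in range(8)]
order = som_tsp(ring_cities, iterations=5000)
print(order, round(tour_length(ring_cities, order), 3))
```

The result is a heuristic tour, not a guaranteed optimum; the ring topology is what keeps neighboring neurons, and therefore consecutive cities, close together.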

Institute of Microelectronics makes new progress in memristor-based neuromorphic computing

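The self-organizing map (SOM, Fig. 1a), also known as the Kohonen network, is a powerful unsupervised learning neural network inspired by the topological structure of the brain. Compared with classical linear algorithms such as multidimensional scaling or principal component analysis, SOM has stronger data clustering capability and shows unique advantages in clustering and optimization problems such as language recognition, text mining, financial forecasting and medical diagnosis. However, implementing SOM in conventional CMOS hardware is limited by the complexity of computing similarities and determining neighborhoods, and suffers from complex circuit structures, high energy and area overhead, and a lack of precise similarity computation. Building simple, efficient and precise SOM hardware remains a major challenge. The memristor is a new type of programmable non-volatile memory device whose crossbar array structure naturally supports parallel and in-memory computing, offering a new route to implementing SOM in hardware.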
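For readers unfamiliar with SOMs, the basic training loop is short: for each input, find the best matching unit (the neuron whose weight vector is closest), then pull that neuron and its grid neighbors toward the input, with a learning rate and neighborhood radius that shrink over time. A plain-Python sketch of the standard algorithm; the names, decay schedules and toy data are my own choices, not the paper's:

```python
import math
import random

def best_matching_unit(weights, x):
    """Grid position whose weight vector is closest (squared Euclidean) to x."""
    return min(weights, key=lambda pos: sum((w - v) ** 2 for w, v in zip(weights[pos], x)))

def train_som(data, rows, cols, epochs=50, lr0=0.5, seed=0):
    """Train a rows x cols SOM; returns {(r, c): weight_vector}."""
    rng = random.Random(seed)
    dim = len(data[0])
    weights = {(r, c): [rng.random() for _ in range(dim)]
               for r in range(rows) for c in range(cols)}
    sigma0 = max(rows, cols) / 2.0
    total, t = epochs * len(data), 0
    for _ in range(epochs):
        for x in rng.sample(data, len(data)):        # one shuffled pass over the data
            frac = t / total
            lr = lr0 * (1.0 - frac)                  # linearly decaying learning rate
            sigma = max(sigma0 * (1.0 - frac), 0.5)  # shrinking neighborhood radius
            bmu = best_matching_unit(weights, x)
            for pos in list(weights):
                grid_d2 = (pos[0] - bmu[0]) ** 2 + (pos[1] - bmu[1]) ** 2
                h = math.exp(-grid_d2 / (2.0 * sigma ** 2))  # Gaussian neighborhood on the grid
                weights[pos] = [w + lr * h * (v - w) for w, v in zip(weights[pos], x)]
            t += 1
    return weights

# Two well-separated 2-D clusters.
cluster_a = [[0.1 + 0.02 * i, 0.1] for i in range(5)]
cluster_b = [[0.9 - 0.02 * i, 0.9] for i in range(5)]
som = train_som(cluster_a + cluster_b, rows=2, cols=2)
print(best_matching_unit(som, [0.1, 0.1]), best_matching_unit(som, [0.9, 0.9]))
```

After training, the two clusters map to different best matching units, which is the clustering behavior the article refers to. Computing these distances for every neuron is exactly the step that becomes expensive in conventional hardware.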

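Recently, the team of Academician Liu Ming at the Institute of Microelectronics and the team of Professor Liu Qi at Fudan University used memristor arrays (Fig. 1b and 1c) to build the weight matrix of an SOM network, achieving an efficient SOM hardware system for the first time. To address the growing hardware complexity as the numbers of neurons and input features increase, the team proposed a new memristor array architecture with multiple additional rows (Fig. 1d). The architecture divides the memristor array into two parts: data rows that store the weights, and additional rows that store the sum of squares of the weights. The similarity between the input vector and the weight vectors can then be obtained in a single read operation, without normalizing the weights. Using this hardware system, the team demonstrated applications including data clustering, image segmentation and image compression, and applied it to a combinatorial optimization problem (Fig. 2). The experimental results show that, without compromising success rate or accuracy, the system achieves higher energy efficiency and computing throughput than a CMOS system. Moreover, because it is unsupervised, it suits a wider range of application scenarios and real-world needs, opening a new route to building memristor-based intelligent hardware.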
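Why storing the squared sums in extra rows lets one read replace a distance computation: for input x and weight vector w_j, ||x - w_j||² = ||x||² - 2·x·w_j + ||w_j||², and ||x||² is the same for every neuron. So the nearest neuron is the one that maximizes x·w_j - ½||w_j||², which is a single vector-matrix product if each column holds w_j plus ||w_j||² and the extra rows are driven with -½. A software sketch of that identity; the function names and the even split of ||w_j||² across several extra rows are hypothetical choices, not the paper's actual layout:

```python
def build_crossbar(weights, extra_rows=1):
    """Lay out neuron weights as crossbar columns.

    Data rows hold the weight components; the extra rows each hold
    ||w_j||^2 / extra_rows, so together they store the squared sum.
    """
    dim = len(weights[0])
    matrix = [[w[i] for w in weights] for i in range(dim)]
    for _ in range(extra_rows):
        matrix.append([sum(c * c for c in w) / extra_rows for w in weights])
    return matrix

def read_scores(matrix, x, extra_rows=1):
    """One vector-matrix 'read': drive data rows with x and extra rows with -0.5.

    Column j accumulates x.w_j - 0.5 * ||w_j||^2 = (||x||^2 - ||x - w_j||^2) / 2.
    """
    inputs = list(x) + [-0.5] * extra_rows
    n_cols = len(matrix[0])
    return [sum(v * row[j] for v, row in zip(inputs, matrix)) for j in range(n_cols)]

def bmu_crossbar(weights, x, extra_rows=1):
    """Best matching unit = column with the largest score."""
    scores = read_scores(build_crossbar(weights, extra_rows), x, extra_rows)
    return max(range(len(scores)), key=scores.__getitem__)

def bmu_euclidean(weights, x):
    """Reference: neuron with the smallest squared Euclidean distance to x."""
    return min(range(len(weights)),
               key=lambda j: sum((w - v) ** 2 for w, v in zip(weights[j], x)))

weights = [[0.0, 0.0], [1.0, 1.0], [5.0, 5.0]]
x = [0.9, 1.2]
print(bmu_crossbar(weights, x), bmu_euclidean(weights, x))  # 1 1
```

With plain dot products (no squared-norm rows), the input [0.9, 1.2] would score 10.5 for neuron 2 and 2.1 for neuron 1 and pick the wrong neuron, which is why dot-product similarity normally requires normalized weights.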

That domain is called super-resolution (SR), and its main goal is high-quality upscaling of images, videos, etc. DLSS is SR applied to rendered game frames. So yes, this algorithm and DLSS come from the same general domain, but I don't think it is accurate to say it is "kinda like" DLSS, since the areas of application are different.

Plus, the article mentions compression, so I think the algorithm probably works like this: the camera records in high quality, the video is compressed with a lossy codec and sent to Earth, then it is decompressed and SR is used to make up for the information lost during compression. In other words, it is more of a multi-step pipeline than just an upscaling network. Or it could be more straightforward: the video is recorded in low quality to be as lightweight as possible, and SR upscaling is then used to enhance it. However, the second variant would produce worse overall video quality than the first: either the video will generally be noisier and less detailed, OR the SR network will introduce unnatural artifacts (a common problem with these kinds of methods). So I think they probably use the first approach.
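For comparison, the classical non-learned way to upscale a frame is interpolation (bilinear or bicubic), which is the usual baseline that SR networks are measured against. A minimal bilinear sketch for a grayscale frame stored as a list of rows; it is only a reference point, and not a claim about what the Shenzhou system does:

```python
def bilinear_upscale(img, new_h, new_w):
    """Upscale a grayscale image (list of equal-length rows) by bilinear interpolation.

    Corner-aligned mapping: output pixel (i, j) samples the input at
    (i * (h - 1) / (new_h - 1), j * (w - 1) / (new_w - 1)).
    """
    h, w = len(img), len(img[0])
    out = []
    for i in range(new_h):
        y = i * (h - 1) / (new_h - 1) if new_h > 1 else 0.0
        y0 = int(y)
        y1 = min(y0 + 1, h - 1)
        fy = y - y0
        row = []
        for j in range(new_w):
            x = j * (w - 1) / (new_w - 1) if new_w > 1 else 0.0
            x0 = int(x)
            x1 = min(x0 + 1, w - 1)
            fx = x - x0
            # Interpolate along x on the two neighboring rows, then along y.
            top = img[y0][x0] * (1 - fx) + img[y0][x1] * fx
            bottom = img[y1][x0] * (1 - fx) + img[y1][x1] * fx
            row.append(top * (1 - fy) + bottom * fy)
        out.append(row)
    return out

print(bilinear_upscale([[0, 10], [20, 30]], 3, 3))
# [[0.0, 5.0, 10.0], [10.0, 15.0, 20.0], [20.0, 25.0, 30.0]]
```

Interpolation can only blend existing pixels, so it never recovers lost detail; learned SR tries to go further by predicting plausible detail, which is also where the artifacts mentioned above come from.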

Chinese scientists make breakthrough in push toward intelligent robotics

By Global Times | Published: Jun 09, 2022 10:49 PM

Photo: The Institute of Microelectronics (IME) of the Chinese Academy of Sciences

Chinese scientists have developed a multimode-fused spiking neuron (MFSN) array that can sense objects' shapes, temperatures and weights in a way that mimics human multisensory perception. It could contribute to the development of highly intelligent robotics in the future.

Conducted by research teams from the Institute of Microelectronics (IME) of the Chinese Academy of Sciences and Fudan University, the research has been published in the materials science journal Advanced Materials.

According to the research, multimode-fused sensing helps people ascertain the properties of objects and make accurate judgments, and the MFSN can achieve this kind of human-like multisensory perception. The findings could lead to practical applications, enabling the building of multimodal sensory systems and contributing to the development of highly intelligent robotics in the future.

CHINA PUBLISHES THE MOST DETAILED MAP OF THE MOON EVER MADE

WHO DOESN'T WANT TO KNOW EVERY NOOK AND CRANNY OF THE MOON?

There's now a map of the Moon's surface more detailed than any that came before it. Published in , researchers from the Chinese Academy of Sciences, the country's Institute of Geochemistry, and other organizations compiled known information about the Moon's surface in a recent study that includes this wildly detailed map of the lunar terrain.

As the study notes, the map is drawn at a precise 1:2,500,000 scale and highlights all known rocks, craters, basins, and structures on the Moon's surface.
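To put the 1:2,500,000 scale in perspective: 1 cm on the map stands for 25 km on the Moon, and the lunar equator would be roughly 4.4 m long at that scale. The sketch assumes a mean lunar radius of 1,737.4 km, which is not taken from the article:

```python
import math

MAP_SCALE = 2_500_000            # 1:2,500,000, as reported
MOON_MEAN_RADIUS_KM = 1737.4     # mean lunar radius (assumed value, not from the article)

def ground_km_per_map_cm(scale):
    """Surface distance represented by 1 cm on a 1:scale map (1 km = 100,000 cm)."""
    return scale / 100_000

def map_length_m(ground_km, scale):
    """Length on the map, in meters, of a given surface distance in km."""
    return ground_km * 1000 / scale

equator_km = 2 * math.pi * MOON_MEAN_RADIUS_KM
print(ground_km_per_map_cm(MAP_SCALE))                # 25.0
print(round(map_length_m(equator_km, MAP_SCALE), 2))  # 4.37
```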

supercat


China has made a breakthrough with a direct-injection hydrogen engine for heavy commercial fuel-cell electric vehicles, something unimaginable in the era of internal combustion engines.