New DeepSeek data format

This went a bit under the radar, but IMHO it is a very interesting part.

What does it mean?

A model parameter (or weight) is a number, no more, no less. DeepSeek has 671B parameters. This means it processes its input through a huge number of big tables called matrices, each one holding millions of parameters (numbers).

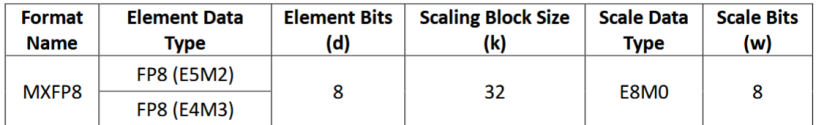

Now, how does a computer represent a number? It can use a 16-bit format like FP16, where each number is stored in 16 bits, or FP8, where each number is stored in 8 bits.
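Here is a small Python sketch that makes the 16-bit vs 8-bit difference concrete. It packs 1.0 as FP16 with the standard `struct` module (format code `e`; Python has no built-in FP8 type). It then estimates the raw weight storage for 671B parameters at 16 and at 8 bits each, ignoring any overhead such as scale factors or metadata.

```python
import struct

# Pack 1.0 as an IEEE 754 half-precision (FP16) number.
# "e" is struct's 16-bit half-float format code; "<" means little-endian.
fp16_bytes = struct.pack("<e", 1.0)
print(len(fp16_bytes), fp16_bytes.hex())

# Rough storage for 671B weights only (no scales, no metadata, no overhead).
PARAMS = 671_000_000_000
for name, bits in [("FP16", 16), ("FP8", 8)]:
    gigabytes = PARAMS * bits // 8 // 1_000_000_000
    print(f"{name}: {gigabytes} GB")
```

Halving the bits per number halves the memory needed to store and move the weights: about 1342 GB at FP16 vs 671 GB at FP8.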

It can store numbers as integers, as in INT8, or as floating-point numbers, as in FP8. Floating point means that a number N is represented as a power of 2 multiplied by a fractional part:

N = s * m * 2^e, where e = exponent, m = mantissa, s = sign (-1 or 1)
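Python exposes this kind of decomposition through `math.frexp`. The sketch below splits a number into s, m and e. The helper `decompose` is just for illustration. Note that `frexp` normalizes the mantissa to [0.5, 1), while hardware formats such as FP8 usually normalize it to [1, 2) with an implicit leading 1. The idea is the same.

```python
import math

def decompose(n: float) -> tuple[int, float, int]:
    """Split n into sign s, mantissa m and exponent e so that n == s * m * 2**e."""
    s = -1 if n < 0 else 1
    m, e = math.frexp(abs(n))  # frexp returns m in [0.5, 1) and an integer e
    return s, m, e

s, m, e = decompose(-12.0)
print(s, m, e)         # -1 0.75 4
print(s * m * 2 ** e)  # -12.0
```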

[Figure: FP8 E4M3 bit layout: 1 sign bit (blue), 4 exponent bits (orange), 3 mantissa bits (green)]

In the picture above, the orange part stores the exponent and the green part the mantissa. So E4M3 means 4 bits for the exponent and 3 for the mantissa, plus 1 (the blue one) for the sign, for a total of 8 bits: FP8.
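Here is a minimal decoder for one E4M3 byte. It assumes the OCP FP8 variant of E4M3: exponent bias 7, no infinities, and a single NaN pattern with all exponent and mantissa bits set. The function name `decode_e4m3` is hypothetical.

```python
import math

def decode_e4m3(byte: int) -> float:
    """Decode one FP8 E4M3 byte (assumed OCP variant: bias 7, no infinities)."""
    sign = -1.0 if byte & 0x80 else 1.0  # bit 7: sign (blue)
    exp = (byte >> 3) & 0x0F             # bits 6..3: exponent (orange)
    man = byte & 0x07                    # bits 2..0: mantissa (green)
    if exp == 0x0F and man == 0x07:
        return math.nan                  # the only NaN encoding
    if exp == 0:
        # Subnormal: no implicit leading 1, fixed exponent 1 - 7 = -6
        return sign * (man / 8) * 2.0 ** -6
    # Normal: implicit leading 1, exponent stored with a bias of 7
    return sign * (1 + man / 8) * 2.0 ** (exp - 7)

for b in (0x38, 0xB8, 0x7E, 0x01):
    print(hex(b), decode_e4m3(b))
```

For the four bytes in the loop it gives 1.0, -1.0, 448.0 (the largest finite E4M3 value) and 0.001953125 (2^-9, the smallest subnormal).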

So how does the UE8M0 FP8 format used by DeepSeek work?

It is an 8-bit floating-point number with all 8 bits used for the exponent. There is no mantissa and, as the U (unsigned) in the name says, no sign bit. It is as if you could only represent powers of 2, for instance:

exponent 1 (01) -> 2^1 = 2

exponent 2 (10) -> 2^2 = 4

exponent 3 (11) -> 2^3 = 8
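The list above leaves out one detail of real formats. The stored exponent is biased so that negative exponents, and therefore numbers smaller than 1, also fit. The sketch below assumes UE8M0 behaves like the E8M0 scale format of the OCP Microscaling (MX) specification: value = 2^(stored - 127), with 0xFF reserved for NaN. Under that assumption 2, 4 and 8 are stored as 128, 129 and 130. The helper names are hypothetical.

```python
import math

BIAS = 127  # assumption: E8M0 exponent bias as in the OCP MX spec

def decode_ue8m0(byte: int) -> float:
    """Decode an unsigned 8-bit exponent (no mantissa) to 2**(byte - BIAS)."""
    if byte == 0xFF:
        return math.nan       # assumed NaN encoding
    return 2.0 ** (byte - BIAS)

def encode_ue8m0(value: float) -> int:
    """Encode a positive power of two; anything else is not representable."""
    m, e = math.frexp(value)  # value == m * 2**e with m in [0.5, 1)
    if m != 0.5:
        raise ValueError(f"{value} is not a positive power of two")
    byte = (e - 1) + BIAS     # value == 2**(e - 1)
    if not 0 <= byte <= 0xFE:
        raise ValueError(f"{value} is out of range")
    return byte

for v in (2.0, 4.0, 8.0):
    b = encode_ue8m0(v)
    print(v, b, format(b, "08b"), decode_ue8m0(b))
```

Values like 3.0 or -2.0 raise an error: with no mantissa and no sign bit, only positive powers of two exist.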

Now suppose you want to perform a multiplication:

2 * 4 = 8

In this UE8M0 format, 2 corresponds to exponent 1, 4 to exponent 2 and 8 to exponent 3. So

2 * 4 = 8 corresponds to summing the exponents: 1 + 2 = 3
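The same trick can be applied to the stored bytes. This is a sketch that assumes a biased exponent (bias 127, as in the E8M0 format of the OCP MX spec) and uses a hypothetical helper `ue8m0_mul`. Adding the two stored fields counts the bias twice, so it is subtracted once. Without the bias this is simply 1 + 2 = 3.

```python
BIAS = 127  # assumption: E8M0-style exponent bias

def ue8m0_mul(a: int, b: int) -> int:
    """Multiply two UE8M0-encoded powers of two by adding their exponent fields.

    (a - BIAS) + (b - BIAS) is the true exponent of the product; adding BIAS
    back once gives the stored field. Only an adder is needed, no multiplier.
    """
    product = a + b - BIAS
    if not 0 <= product <= 0xFE:  # 0xFF assumed reserved for NaN
        raise OverflowError("product exponent out of range")
    return product

two, four = 1 + BIAS, 2 + BIAS  # stored fields for 2**1 and 2**2
eight = ue8m0_mul(two, four)
print(eight - BIAS, 2.0 ** (eight - BIAS))  # 3 8.0
```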

In this format, multiplication can be implemented as an addition, so a much simpler adder circuit can be used instead of a costly hardware multiplier!

The above example is not a special case. This is called a logarithmic number system, and it relies on the property that log(a*b) = log(a) + log(b).
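UE8M0 can be seen as the extreme case of a logarithmic number system, in which the logarithm is stored as a whole number. The sketch below shows a more general fixed-point variant that keeps `FRAC_BITS` fractional bits of log2(x). That makes values between powers of two representable, at the price of rounding. The names `FRAC_BITS`, `to_lns`, `from_lns` and `lns_mul` are hypothetical. Multiplication is still a plain integer addition.

```python
import math

FRAC_BITS = 4  # hypothetical: fractional bits kept in the stored logarithm

def to_lns(x: float) -> int:
    """Store a positive number as round(log2(x) * 2**FRAC_BITS), a fixed-point log."""
    return round(math.log2(x) * (1 << FRAC_BITS))

def from_lns(code: int) -> float:
    """Convert a fixed-point log2 code back to an ordinary float."""
    return 2.0 ** (code / (1 << FRAC_BITS))

def lns_mul(a: int, b: int) -> int:
    """log(a*b) = log(a) + log(b): multiplying is an integer addition."""
    return a + b

product = from_lns(lns_mul(to_lns(3.0), to_lns(5.0)))
print(product)  # close to 15, with error from rounding the logarithms
```

With `FRAC_BITS = 4`, 3 * 5 comes out at about 14.7. With `FRAC_BITS = 0` every value is rounded to a power of two, which is exactly the UE8M0 situation.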

Because matrix multiplication is by far the biggest operation in these models, this trick could simplify the hardware a lot.
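Here is a toy matrix-vector product that shows where the trick would apply. It assumes every weight is a positive power of two, stored as its exponent. This is a strong simplification, since real weights also have signs. Each product uses `math.ldexp`, which scales a float by 2^e by adjusting its exponent, so no mantissa multiplication is involved. The additions that accumulate each row still use ordinary arithmetic (`math.fsum`). The function name `matvec_pow2` and the example values are hypothetical.

```python
import math

def matvec_pow2(weight_exps: list[list[int]], x: list[float]) -> list[float]:
    """Matrix-vector product where every weight is a power of two, 2**e.

    Each product x[j] * 2**e is computed with math.ldexp, which only changes
    the exponent of x[j]; the row sums still use ordinary float addition.
    """
    return [
        math.fsum(math.ldexp(xj, e) for xj, e in zip(x, row))
        for row in weight_exps
    ]

W_exps = [[1, 2], [0, -1]]  # hypothetical weights: [[2, 4], [1, 0.5]]
x = [3.0, 1.5]
print(matvec_pow2(W_exps, x))  # [12.0, 3.75]
```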