While this is perfectly fine for research, involving OpenAI does not seem wise in business terms, as most sensitive information should be kept locally on secured hardware.

A new symbolic memory framework, ChatDB, was proposed by Zhao Xing's research group at Tsinghua University's Institute for Interdisciplinary Information Sciences

Tsinghua News, June 29. Researchers from the group of Assistant Professor Zhao Xing at the Institute for Interdisciplinary Information Sciences, Tsinghua University, together with collaborating institutions, recently proposed ChatDB, a new symbolic memory framework. It overcomes limitations of previously common memory frameworks, such as imprecise operations on stored information and the lack of structure in how historical information is stored.

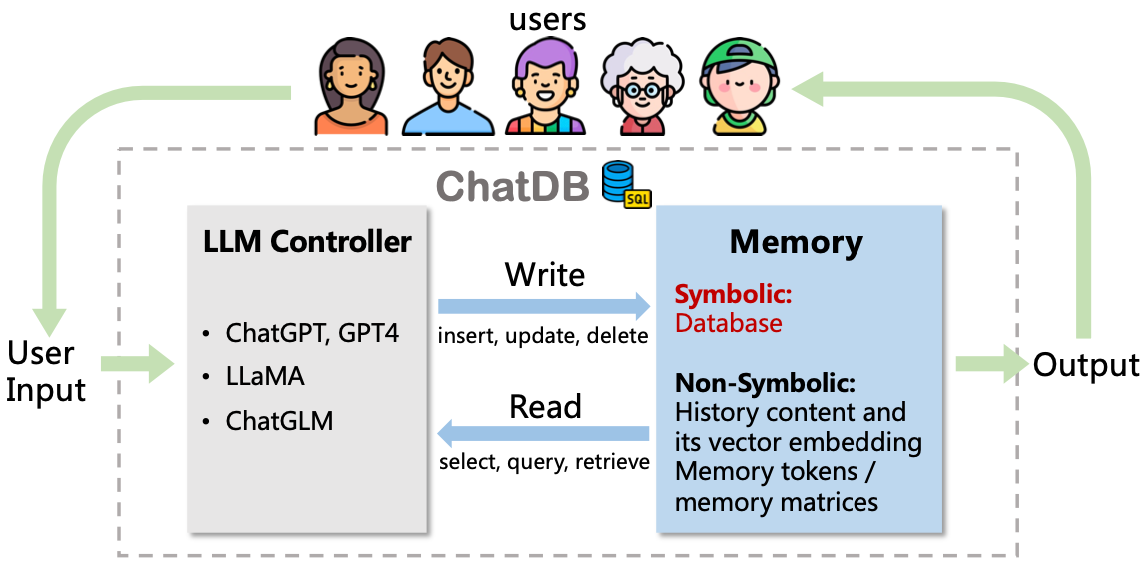

Figure 1. ChatDB workflow diagram

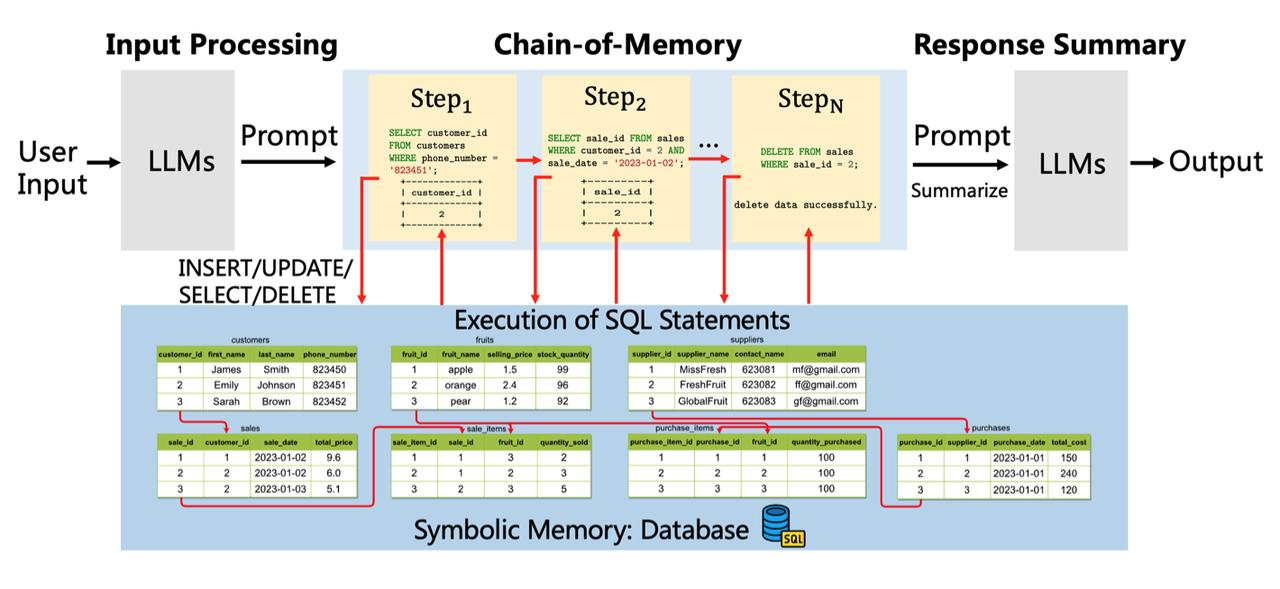

ChatDB consists of a large language model (such as ChatGPT) and a database, which can use symbolic operations (ie SQL instructions) to achieve long-term and accurate recording, processing and analysis of historical information, and help respond to user needs. Its framework consists of three main stages: input processing, chain-of-memory, and response summary. In the first stage, LLMs process user input requirements, and directly generate replies for commands that do not involve the use of database memory modules; and generate a series of SQL statements that can interact with database memory modules for commands that involve memory modules. In the second stage, the memory chain performs a series of intermediate memory operations and interacts with symbolic memory modules. ChatDB performs operations such as insert, update, select, and delete in sequence according to the previously generated SQL statements. The external database executes the corresponding SQL statement, updates the database and returns the result. Before executing each memory operation, ChatDB will decide whether to update the current memory operation according to the results of previous SQL statements. In the third stage, the language model synthesizes the results obtained by interacting with the database, and makes a summary reply to the user's input.

Figure 2. ChatDB framework overview

To verify that using a database as ChatDB's symbolic memory module improves the effectiveness of large language models, and to compare quantitatively with other models, the researchers built a synthetic dataset of fruit shop operations and management called the "Fruit Shop Dataset". It contains 70 store records generated in chronological order, totaling about 3,300 tokens (below ChatGPT's maximum context window of 4,096 tokens). The records cover four common fruit shop operations: purchase, sale, price adjustment, and return. ChatDB's LLM module uses ChatGPT (GPT-3.5 Turbo) with the temperature set to 0, and a MySQL database serves as its external symbolic memory module. The baseline for comparison is ChatGPT (GPT-3.5 Turbo) with a maximum context length of 4,096 tokens, also with the temperature set to 0. In experiments on the fruit shop question-answering dataset, ChatDB showed a significant advantage over ChatGPT in answering these questions.
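The sketch below suggests why a symbolic memory helps on this kind of dataset. A question such as "what were the net sales of apples?" forces the plain ChatGPT baseline to do arithmetic over the whole record history held in its context. With a database, the same question reduces to an exact SQL aggregation. This is a minimal sketch under stated assumptions. The schema, the record format, and the rule that sales and refunds use the current selling price are all hypothetical. Python's `sqlite3` stands in for MySQL, and `apply_record` plays the role of the LLM translating records into SQL.

```python
import sqlite3
from typing import Optional

# Hypothetical schema for illustration; the paper's actual tables may differ.
SCHEMA = """
CREATE TABLE fruits (name TEXT PRIMARY KEY, sell_price REAL, stock INTEGER NOT NULL);
CREATE TABLE events (kind TEXT, fruit TEXT, qty INTEGER, unit_price REAL);
"""


def current_price(conn: sqlite3.Connection, fruit: str) -> float:
    return conn.execute("SELECT sell_price FROM fruits WHERE name = ?", (fruit,)).fetchone()[0]


def apply_record(conn: sqlite3.Connection, kind: str, fruit: str,
                 qty: int = 0, price: Optional[float] = None) -> None:
    """Turn one shop record into SQL writes (the job ChatDB gives the LLM)."""
    if kind == "purchase":  # price = unit cost paid to the supplier
        conn.execute("INSERT OR IGNORE INTO fruits VALUES (?, NULL, 0)", (fruit,))
        conn.execute("UPDATE fruits SET stock = stock + ? WHERE name = ?", (qty, fruit))
        conn.execute("INSERT INTO events VALUES ('purchase', ?, ?, ?)", (fruit, qty, price))
    elif kind == "price_adjustment":  # price = new selling price
        conn.execute("UPDATE fruits SET sell_price = ? WHERE name = ?", (price, fruit))
    elif kind in ("sale", "return"):
        # Assumption: sales and refunds both use the current selling price.
        unit = current_price(conn, fruit)
        delta = -qty if kind == "sale" else qty
        conn.execute("UPDATE fruits SET stock = stock + ? WHERE name = ?", (delta, fruit))
        conn.execute("INSERT INTO events VALUES (?, ?, ?, ?)", (kind, fruit, qty, unit))
    else:
        raise ValueError(f"unknown record kind: {kind}")


def net_sales(conn: sqlite3.Connection, fruit: str) -> float:
    """Exact answer via aggregation instead of re-reading the whole history."""
    row = conn.execute(
        "SELECT COALESCE(SUM(CASE kind WHEN 'sale' THEN qty * unit_price "
        "WHEN 'return' THEN -qty * unit_price ELSE 0 END), 0) "
        "FROM events WHERE fruit = ?",
        (fruit,),
    ).fetchone()
    return row[0]


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.executescript(SCHEMA)
    for rec in [
        ("purchase", "apple", 50, 0.8),
        ("price_adjustment", "apple", 0, 1.5),
        ("sale", "apple", 10, None),
        ("price_adjustment", "apple", 0, 2.0),
        ("sale", "apple", 5, None),
        ("return", "apple", 2, None),
    ]:
        apply_record(conn, *rec)
    print(net_sales(conn, "apple"))  # prints 21.0 (15.0 + 10.0 - 4.0)
    print(conn.execute("SELECT stock FROM fruits WHERE name = 'apple'").fetchone()[0])  # prints 37
```

The price adjustment between the two sales is the kind of detail that is easy to lose when reasoning over raw text. In the table, the event log simply stores the unit price that applied at each moment.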

The work was recently posted to arXiv (operated by Cornell University) as the paper "ChatDB: Augmenting LLMs with Databases as Their Symbolic Memory".

The co-first authors of the paper are Hu Chenxu, a doctoral student at the Institute for Interdisciplinary Information Sciences, Tsinghua University, and Fu Jie, a researcher at the Zhiyuan Research Institute (BAAI). The corresponding authors are Fu Jie and Zhao Xing, an assistant professor at the Institute for Interdisciplinary Information Sciences. Other co-authors include Luo Simian and Assistant Professor Zhao Junbo of Zhejiang University.

So I want to share this repo, which I also use myself. It supports popular self-hosted LLMs (ChatGLM, Vicuna, etc.) and is developed by Chinese developers.

However, I would expect this project to perform less well on SQL processing.

Introduction

DB-GPT creates a large model operating system using FastChat and offers a large language model powered by Vicuna. In addition, we provide question answering over private-domain knowledge bases through LangChain. We also support additional plugins, and our design natively supports Auto-GPT plugins. The overall architecture of DB-GPT is shown in the following figure:

The core capabilities mainly consist of the following parts:

- Knowledge base capability: Supports private domain knowledge base question-answering capability.

- Large-scale model management capability: Provides a large model operating environment based on FastChat.

- Unified data vector storage and indexing: Provides a uniform way to store and index various data types.

- Connection module: Used to connect different modules and data sources to achieve data flow and interaction.

- Agent and plugins: Provides Agent and plugin mechanisms, allowing users to customize and enhance the system's behavior.

- Prompt generation and optimization: Automatically generates high-quality prompts and optimizes them to improve system response efficiency.

- Multi-platform product interface: Supports various client products, such as web, mobile applications, and desktop applications.
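The knowledge-base and unified vector storage capabilities above follow a common retrieval pattern. Documents from different sources are embedded and indexed in one store, and the entries closest to a question are retrieved and placed into the LLM prompt. The sketch below illustrates only that general idea and is not DB-GPT's API. The `VectorStore` class is hypothetical, and `embed` is a toy bag-of-words counter standing in for a real embedding model.

```python
import math
from collections import Counter
from typing import Dict, List, Tuple


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: lowercase bag-of-words counts."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors (0.0 if either is empty)."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class VectorStore:
    """Hypothetical in-memory index holding documents from any source."""

    def __init__(self) -> None:
        self._items: List[Tuple[str, Dict[str, str], Counter]] = []

    def add(self, text: str, **metadata: str) -> None:
        # Store the raw text, its metadata (e.g. source), and its vector.
        self._items.append((text, metadata, embed(text)))

    def search(self, query: str, k: int = 1) -> List[Tuple[float, str, Dict[str, str]]]:
        # Score every entry against the query and return the top k.
        q = embed(query)
        scored = [(cosine(q, vec), text, meta) for text, meta, vec in self._items]
        scored.sort(key=lambda s: s[0], reverse=True)
        return scored[:k]


if __name__ == "__main__":
    store = VectorStore()
    store.add("orders table stores order id customer id and total", source="db_schema")
    store.add("refunds are processed within seven days", source="policy.pdf")
    score, text, meta = store.search("how long do refunds take", k=1)[0]
    print(meta["source"])  # prints policy.pdf
```

A real deployment would swap `embed` for a learned embedding model and the list scan for an approximate nearest-neighbor index. The retrieved `text` would then be inserted into the prompt sent to the LLM.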